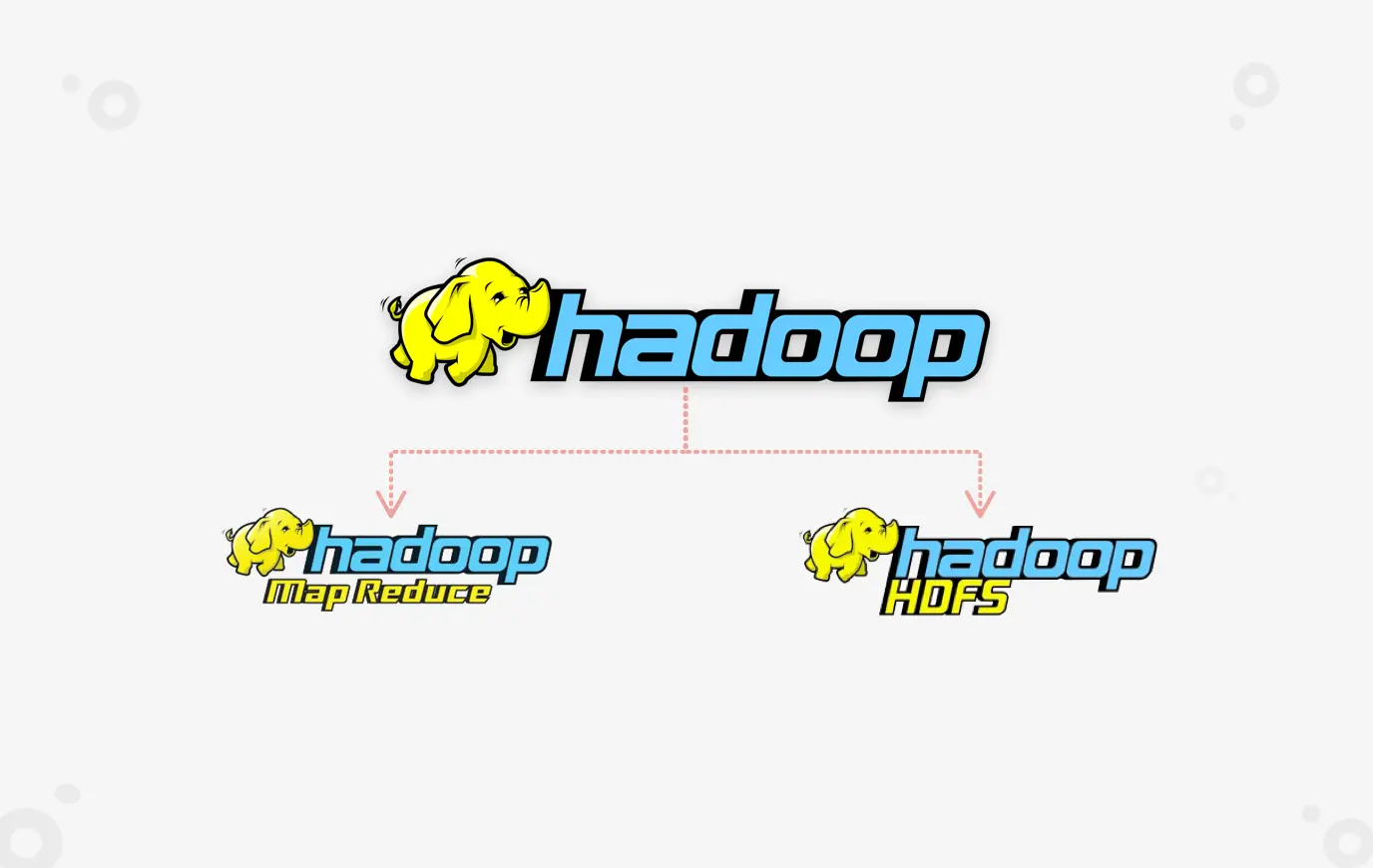

Hadoop is a lot of things to a lot of people, so it is essential to understand what you want Hadoop to do for you. This is not because there are things Hadoop does poorly, but because Hadoop is flexible enough to be tailored to your defined business goals. At its core, Hadoop consists of two elements:

- MapReduce

- Hadoop Distributed File System (HDFS)

Together, HDFS and MapReduce can efficiently tackle the biggest big data problems you throw at them. But adopting Hadoop is not a walk in the park. I would rather compare it to the route march on the first day of Special Forces training.

Hadoop adoption becomes easy only when it is managed in phases. First, figure out what you want Hadoop to achieve for you. Once you have answered this question, build a prioritized list of business use cases. Voilà! You have settled the most critical element: a clear direction of where you're heading.

Once that's set, you need a roadmap to get there. This roadmap defines the technology stack to be used, identifies the stakeholders needed for success, and builds acceptance of Hadoop as a long-term solution within the organization. Below, I've divided the roadmap into stages for easier comprehension.

What to Explore

Stage 1: Understanding and Identifying Business Cases

As with every technology switch, the first stage is understanding the new technology and tool stack and communicating the benefits that end users and the organization will see. At this stage, a close look at your current system helps identify business cases that are redundant or can be merged, so that only what matters is carried forward. It also helps build a prioritized list of projects tied to definite business use cases. This is where you define the key indicators of success. The performance and success criteria of the legacy system you are currently running also need a complete revamp, and business stakeholders are key to refining the business SLAs.

Stage 2: Warming Up to the Technology Stack

Next, bring in the technology stack so people can familiarize themselves with it. Build a playground, a "dirty" development environment where developers and analysts can experiment and innovate without fear of bringing down the business. This allows data modelers and DBAs to build the most optimal warehouse and lets ETL developers learn the pitfalls, so that they establish best practices before heading into full-fledged project work.

Stage 3: Converting the Old to New

A key element of Hadoop adoption is an efficient conversion from the old system to the new one. Identify dark or missing data elements early, establish coding standards and optimization techniques, automate as much as possible to reduce conversion errors, and validate the correctness of the conversion against the legacy system. Bitwise recommends a Proof -> Pilot -> Production path to conversion, in which we nibble away at the legacy applications and build a repeatable framework before biting off a big chunk of business requirements.
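
The "validate against the legacy" step can be illustrated with a simple reconciliation check. The Python below is a minimal sketch, not Bitwise's framework: it assumes both outputs have already been extracted into lists of row tuples (for example, a legacy export and the output of the converted Hadoop job), and the function names `row_fingerprint` and `reconcile` are hypothetical. It compares the two outputs as multisets of row hashes, so row order is ignored but duplicate rows still count.

```python
import hashlib
from collections import Counter

# Hypothetical helpers; assumes rows are already extracted as Python tuples.


def row_fingerprint(row):
    """Hash one record; fields are joined with a unit-separator character."""
    joined = "\x1f".join("" if v is None else str(v) for v in row)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(legacy_rows, converted_rows):
    """Compare legacy and converted outputs as multisets of row fingerprints."""
    legacy = Counter(row_fingerprint(r) for r in legacy_rows)
    converted = Counter(row_fingerprint(r) for r in converted_rows)
    # Counter subtraction keeps only positive counts, i.e. unmatched rows.
    missing = sum((legacy - converted).values())
    unexpected = sum((converted - legacy).values())
    return {
        "legacy_count": sum(legacy.values()),
        "converted_count": sum(converted.values()),
        "missing_in_converted": missing,
        "unexpected_in_converted": unexpected,
        "matches": missing == 0 and unexpected == 0,
    }


if __name__ == "__main__":
    legacy = [(1, "alice", 10.0), (2, "bob", 20.0)]
    converted = [(2, "bob", 20.0), (1, "alice", 10.5)]
    print(reconcile(legacy, converted))
```

In practice, values usually need normalizing (types, whitespace, number formatting) before hashing, since in this sketch `10` and `10.0` produce different fingerprints and would be reported as a mismatch.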

Stage 4: Maintenance and Support

Once in production, Hadoop needs what every production system in the world needs: maintenance and support. Things break down, and components get upgraded or deprecated. What is needed is a dedicated team to keep track of the Hadoop ecosystem. Besides regular application production support, a support team structure is required to ensure the availability and reliability of the environment.

Backed by extensive experience working with Fortune 500 companies, we at Bitwise have made Hadoop adoption a walk in the park for our clients and have helped them use Hadoop effectively to meet their ELT and analytics needs. Have a look at our Excellerators to learn how organizations worldwide are unlocking the real value of Hadoop with our proven methodology.
