Data Analytics and AI

Achieved 100% Process Accuracy with Ab Initio

Bitwise helped a Fortune 500 financial services company build an enterprise-wide application using advanced Ab Initio features and modern optimization techniques. The process ingests near real-time transactional events (more than 5 million every day), validates their relevance, qualifies them for promotional campaigns, and issues payouts according to defined business rules. It meets stringent business SLAs while maintaining high processing accuracy and data quality.

The Client

One of the largest U.S.-based financial services companies, known for its reward programs and industry-leading customer service (according to a well-known customer satisfaction survey).

Client Challenges and Requirements

- The client's costly, mainframe-based legacy system needed to be redesigned with modern technologies to make it more flexible, so that it could adapt quickly to changing business needs and accommodate new features without significant investment in future design and coding.
- The client also needed the system to serve as an enterprise-wide standard application usable across business units, to deliver higher performance, and to be 100% accurate.

Bitwise Solution

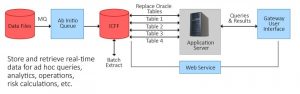

The Bitwise team helped design and develop a state-of-the-art batch process using Ab Initio products on client-hosted UNIX (AIX) grid servers, and applied the following features and techniques to meet the client's challenges:

BRE (Business Rules Environment) and ACE (Application Configuration Environment): Increase application flexibility, enhance testing and simulation capabilities, increase automation, and reduce redundancy.

ICFF (Indexed Compressed Flat Files): Provide fast access to individual records during high-volume data lookups.

Queues: A data structure that efficiently manages data exchanged between jobs running at different rates, ensuring zero data loss.

Key-Driven Unloads: Speed up unloads from large database tables by selecting rows with input key values; best suited when the input data stream is itself medium to large in size.

Merge SQL and Direct Load Techniques: Load very large volumes of data quickly.
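Ab Initio's ICFF internals are proprietary, so the following is only a conceptual Python sketch of the general idea behind an indexed, compressed flat file: records are sorted by key, grouped into separately compressed blocks, and a small index of each block's first key lets a lookup decompress one block instead of scanning the whole file. The class name `IndexedCompressedFile`, the block size, and the tab-separated record layout are hypothetical choices for illustration, not Ab Initio's file format or API.

```python
import bisect
import zlib
from typing import Dict, List, Optional, Tuple


class IndexedCompressedFile:
    """Hypothetical illustration of an indexed, compressed flat file.

    This is NOT Ab Initio's ICFF format or API. Records are sorted by key,
    grouped into fixed-size blocks, and each block is zlib-compressed on its
    own. A sparse index keeps the first key and byte extent of every block.
    Assumes keys and values contain no tab or newline characters, and that
    write() is called once (the file is treated as immutable afterwards).
    """

    def __init__(self, block_size: int = 1000) -> None:
        self.block_size = block_size
        self.data = bytearray()                   # stands in for the file on disk
        self.first_keys: List[str] = []           # first key of each block, sorted
        self.extents: List[Tuple[int, int]] = []  # (offset, length) of each block
        self.blocks_read = 0                      # blocks decompressed by lookups

    def write(self, records: Dict[str, str]) -> None:
        items = sorted(records.items())
        for start in range(0, len(items), self.block_size):
            block = items[start:start + self.block_size]
            payload = "\n".join(f"{k}\t{v}" for k, v in block).encode("utf-8")
            compressed = zlib.compress(payload)
            self.first_keys.append(block[0][0])
            self.extents.append((len(self.data), len(compressed)))
            self.data.extend(compressed)

    def lookup(self, key: str) -> Optional[str]:
        # Binary-search the index for the last block whose first key <= key.
        i = bisect.bisect_right(self.first_keys, key) - 1
        if i < 0:
            return None  # key sorts before every stored record
        offset, length = self.extents[i]
        # Decompress only that one block, not the whole file.
        payload = zlib.decompress(bytes(self.data[offset:offset + length]))
        self.blocks_read += 1
        for line in payload.decode("utf-8").split("\n"):
            k, v = line.split("\t", 1)
            if k == key:
                return v
        return None


if __name__ == "__main__":
    f = IndexedCompressedFile(block_size=2)
    f.write({"acct-003": "gold", "acct-001": "silver",
             "acct-002": "bronze", "acct-004": "gold"})
    print(f.lookup("acct-002"), f.blocks_read)  # prints: bronze 1
```

The design choice being illustrated is that a lookup costs one binary search of a small in-memory index plus the decompression of a single block, rather than a scan of the entire file; a real implementation would persist the index alongside the compressed data.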

Key Results

Flexible design that reduces future coding and testing effort by up to 50%

100% process accuracy and 0% data leakage

More than 50% reduction in CPU cycle usage, resulting in higher performance

More than 75% improvement in batch run times